Serendipity in Future Music Creation: Producer Scott Young

November 21, 2023

This is the third installment in a series featuring the voices of musicians who use Neutone, an AI-based real-time timbre transfer plugin developed by Neutone.

In September 2023, Scott Young visited the Neutone office to provide feedback to the development team.

Below is his commentary on the album ‘A Model Within,’ which he created using Neutone and which was featured in an Ableton interview. The commentary also covers his perspective on the future of the music industry.

How he came to use Neutone in ‘A Model Within’

Last year, while I was working on a project, my friend Eames mentioned using machine learning to speed things up a bit. So I started checking out IRCAM’s RAVE algorithm. I tried to train a model from scratch using Google Colab, but the results were less than ideal: my training set was small, and I only trained the model for a few days on a virtual machine with an A100 GPU.

During my research on the IRCAM forum, I discovered Neutone, which not only uses the RAVE algorithm but also incorporates the DDSP technique. The preset models were so impressive that I eagerly began creating tunes with them. The DDSPXSynth model offered four parameters to manipulate: IR Convolution Reverb, pitch control, noise, and formant control. In my project ‘A Model Within,’ I manipulated these parameters to process various audio sources, including a Phase Plant patch I created, a voice memo of my daughter, and the sounds of the Diana Memorial Fountain in Hyde Park.

The results were mind-blowing: it sounded like an album played by a real person, and you could definitely feel the emotional impact. I think that with this generative approach, the key for a human creator is knowing where to trim the pieces and how to connect them into something meaningful that resonates with us.

Other plugins used

During the mixing stage, the plugin’s output often came across as dry and flat. To address this, I started with Soundtoys’ Devil-Loc Deluxe to add a bit of crunch to the digital output: just a sprinkle of Crush and Darkness.

The output is usually mono, but I made it sound stereo using Kilohearts’ Haas plugin. Then I used Align Delay to adjust the phase exactly how I wanted it.
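For readers curious about the principle behind this kind of widening, here is a minimal sketch of the general Haas (precedence) effect in plain Python: the same mono signal goes to both channels, with one channel delayed by a few milliseconds so the ear still hears a single, but wider, sound. This is an illustration under stated assumptions, not how Kilohearts’ Haas plugin is actually implemented; the function name `haas_widen` and the 15 ms default delay are hypothetical choices for the example.

```python
def haas_widen(mono, sample_rate, delay_ms=15.0):
    """Hypothetical helper: widen a mono signal via the Haas (precedence) effect.

    The left channel carries the dry signal; the right channel carries the
    same signal delayed by delay_ms. Both channels are padded to equal length.
    """
    if delay_ms < 0:
        raise ValueError("delay_ms must be non-negative")
    # Convert the delay from milliseconds to a whole number of samples.
    delay_samples = round(sample_rate * delay_ms / 1000.0)
    padding = [0.0] * delay_samples
    left = list(mono) + padding   # dry signal, zero-padded at the end
    right = padding + list(mono)  # delayed copy, zero-padded at the start
    return left, right


# Usage: a 3-sample signal at a toy 1 kHz sample rate, delayed by 2 ms (2 samples).
left, right = haas_widen([1.0, 0.5, 0.25], sample_rate=1000, delay_ms=2.0)
print(left)   # [1.0, 0.5, 0.25, 0.0, 0.0]
print(right)  # [0.0, 0.0, 1.0, 0.5, 0.25]
```

Delays from a few milliseconds up to roughly 30 ms are commonly used for this effect. One caveat of the technique: summing such a pair back to mono causes comb filtering, which is one reason a later timing or phase adjustment can matter.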

Next, I applied some gentle compression before sending the mix off to mastering. I rely on the Lindell SBC to tighten the dynamic range and get a well-balanced mix.

To top it off, I added a hint of EQ using the Lindell TE-100, which emulates the tube-driven Klein & Hummel UE-100 EQ. Both of these fantastic plugins are available from Plugin Alliance.

Inspiration in editing methods

In my approach, I draw inspiration from the traditional methods of editing jazz records. Back in the day, we would physically cut and splice tape to merge multiple takes into a seamless sequence. By identifying particularly engaging segments, we could assemble them into what appears to be a single, cohesive live performance.

I arranged two voice memos of my daughter in sequence and worked them into the saxophone performance. Surprisingly, the result still comes across as a coherent free jazz interpretation.

Goals in Mastering

I began with the plugin’s pre-built models and then enhanced their output with a classic compressor and equalizer. Next, I moved on to the mastering stage, where I fine-tuned the tone and shape of the sound. This process let me blend the digital artifacts with the processed instruments, ultimately creating a unique and polished result.

My goal in mastering this record was to preserve the unique characteristics of the models while enhancing the system’s artifacts and bringing them to a comfortable playback level.

In essence, we set out to restore the timbre suggested by each model’s name and to play with the digital artifacts. Roc (EVOL) joined me in this process. For instance, on the final track, ‘Kora,’ we experimented with making the instrument sound more plucky, which, in turn, brought the artifacts further to the forefront. Once we had defined these distinct qualities, we focused on boosting the overall fullness of the sound.

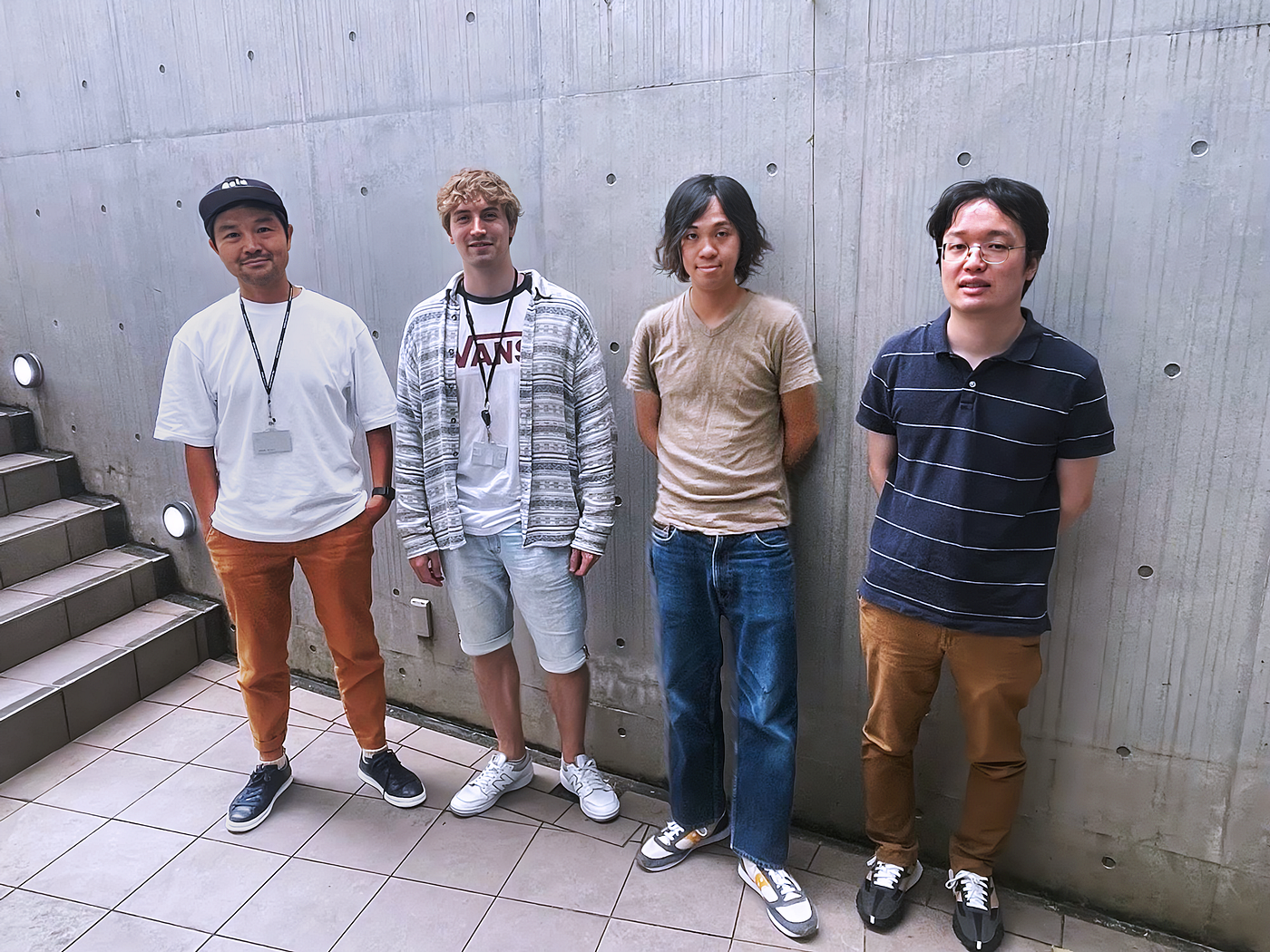

From left to right: Naoki Tokui, Andrew Fyfe, Scott Young, and Naotake Masuda.

The rapid development of machine learning today

Machine learning has really taken off in the last couple of months, and GPT-4 is making quite an impact. It’s super handy for handling time-consuming tasks with just a simple prompt. Imagine asking GPT-4 to run ffmpeg and snip the first 5 seconds of a video. Pretty cool, right?

And soon, we might be able to do the same with audio! Transposing a clip, detecting a sample, or even removing certain timbres could all be possible in the near future. The best part? All it takes is a prompt, so you won’t need fancy software or a powerful machine.

The Future of Music Production

Music-making in the future could be increasingly remote and less dependent on in-person collaboration.

In the past, our musical performances were shaped by muscle memory developed through extensive practice, or by pushing beyond the limits of our training. However, it’s likely that these patterns already exist somewhere.

With this approach, musicians can record as many takes as they want and use those takes to train a model. Producers can then choose their preferred models, play them from a sequencer, and edit the results, creatively stitching the takes together into something fresh and unique.

Because the output is always evolving, serendipity plays an even greater role in music creation.

Message to Neutone

I couldn’t have created this album without the support of the Neutone team. Their open-source project, Neutone, opened up the possibility for anyone to tap into the potential of making music through timbre transfer. They offer Google Colab notebooks to help users train their own models, and their Discord community is active and helpful if you run into any issues. The constant updates and contributions from this vibrant community make all of the exciting developments in this field possible.

Acknowledgment

Finally, a massive thank you to Christian Di Vito from SUPERPANG and to my wife Annie, who both believed in my vision. All of the tunes were complete when I sent them out. However innovative this kind of music might be, what truly matters is that our emotions respond with ‘it sounds really nice!’

Text: Scott Young, Tatsuya Mori
